Estimate user preferences for any intervention attribute

3 principles to dynamically personalize user experiences

I want to tie together three different things I wrote about recently:

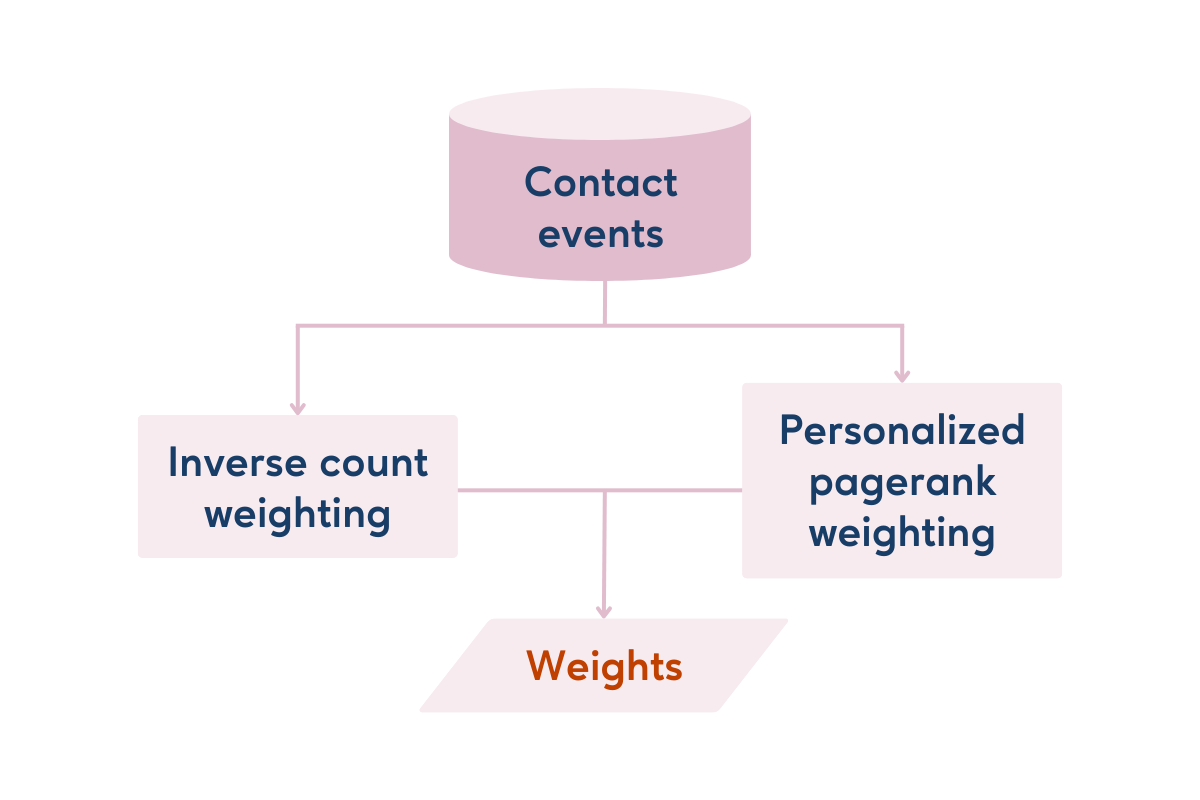

1. We can use a weighting strategy to convert all instrumented app events, not just funnel events, into useful signal for agentic learners. I personally prefer the weight to be a combination of each event's average daily inverse count and a PageRank score personalized to prioritize end-of-funnel events.

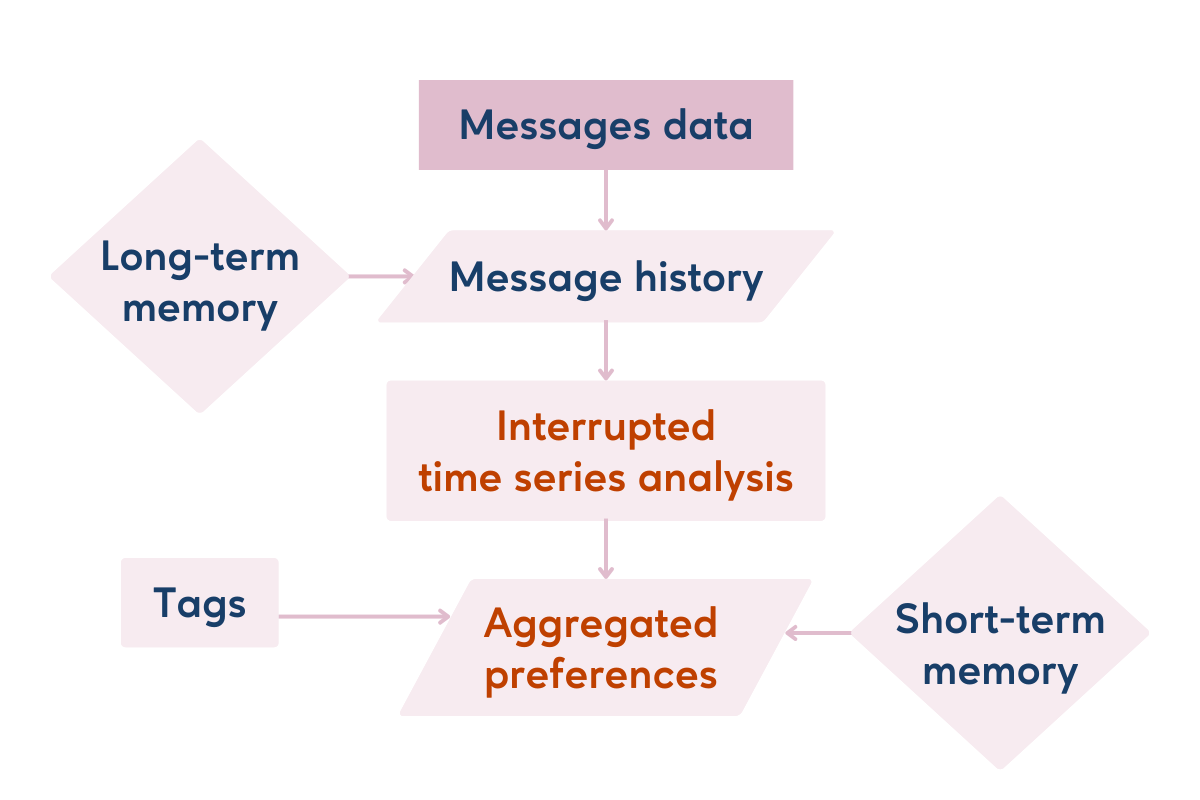

2. We can perform a version of interrupted time series (ITS) analysis, treating each message as the "interruption" and using a sufficiently large, exponentially weighted lookback and lookahead window around it (I've found that 12 hours is a good default window size).

3. We can aggregate judgments over time using another exponential decay to define long-term and short-term memory. Long-term memory is how much intervention history you choose to aggregate, and short-term memory defines the half-life of the decay function.
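A minimal Python sketch of the first two principles, assuming events arrive as `(user_id, event_name, time_in_hours)` tuples. Several choices here are assumptions rather than the method described above: "average daily inverse count" is read as the inverse of an event's average daily count, the PageRank graph is built from consecutive events per user, the two weight components are combined by multiplication, the damping factor is the conventional 0.85, and events inside the 12-hour window decay with a 6-hour half-life. All function names are hypothetical.

```python
from collections import defaultdict


def inverse_daily_count_weights(events, n_days):
    """Inverse of each event type's average daily count: rarer events weigh more."""
    counts = defaultdict(int)
    for _user, name, _t in events:
        counts[name] += 1
    return {name: n_days / count for name, count in counts.items()}


def transition_edges(events):
    """Edge (a, b) for every pair of consecutive events by the same user."""
    by_user = defaultdict(list)
    for user, name, t in events:
        by_user[user].append((t, name))
    edges = []
    for seq in by_user.values():
        seq.sort()
        edges += [(a[1], b[1]) for a, b in zip(seq, seq[1:])]
    return edges


def personalized_pagerank(edges, targets, damping=0.85, iters=50):
    """Power-iteration PageRank that restarts only at the end-of-funnel
    `targets` and walks transitions backwards, so events that tend to lead
    to the targets accumulate rank."""
    nodes = {n for edge in edges for n in edge} | set(targets)
    back = defaultdict(list)  # event -> events that immediately preceded it
    for src, dst in edges:
        back[dst].append(src)
    restart = {n: (1.0 / len(targets) if n in targets else 0.0) for n in nodes}
    rank = dict(restart)
    for _ in range(iters):
        nxt = {n: (1 - damping) * restart[n] for n in nodes}
        for n in nodes:
            # Nodes with no predecessors send their mass back to the targets.
            spread = back.get(n) or list(targets)
            for m in spread:
                nxt[m] += damping * rank[n] / len(spread)
        rank = nxt
    return rank


def event_weights(events, n_days, end_of_funnel):
    inv = inverse_daily_count_weights(events, n_days)
    ppr = personalized_pagerank(transition_edges(events), end_of_funnel)
    # Assumption: the two components are combined by multiplication.
    return {name: inv[name] * ppr.get(name, 0.0) for name in inv}


def its_signal(events, weights, user, message_time, window=12.0, half_life=6.0):
    """Exponentially weighted event signal for `user` within `window` hours
    before and after a message sent at `message_time` (hours)."""
    before = after = 0.0
    for u, name, t in events:
        dt = t - message_time
        if u != user or abs(dt) > window:
            continue
        w = weights.get(name, 0.0) * 0.5 ** (abs(dt) / half_life)
        if dt < 0:
            before += w
        else:  # events at or after the send time count as "after"
            after += w
    return before, after


# Toy usage: times are hours within one day of data.
events = [
    ("u1", "open_app", 0.0), ("u1", "view_item", 1.0), ("u1", "purchase", 2.0),
    ("u2", "open_app", 0.5), ("u2", "view_item", 3.0),
    ("u1", "open_app", 20.0), ("u1", "view_item", 21.0), ("u1", "purchase", 22.0),
]
weights = event_weights(events, n_days=1, end_of_funnel={"purchase"})
before, after = its_signal(events, weights, user="u1", message_time=15.0)
print(weights)
print(before, after)
```

With this toy data, `purchase` gets the largest weight and the events that lead into it get less. A message to `u1` at hour 15 sees no activity in the preceding 12 hours and a full purchase path afterward, so all of its evidence lands in `after`.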

Using these three principles, we can estimate user-level preferences for anything that can be defined as an intervention attribute. The day of week, time of day, hours since the triggering event, channel, value proposition, offering category, call to action, and recommendation type instantiated in an individual message are all examples of intervention attributes, as are interactions among any of them.
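Tagging a message with its attributes, including interactions, can be sketched as expanding a dictionary of tags into string keys. The tag names, the `name=value` key format, and the `max_order` cap (the number of interaction keys grows combinatorially with the number of attributes) are illustrative assumptions.

```python
from itertools import combinations


def attribute_keys(tags, max_order=2):
    """Expand a message's tags (attribute -> value) into attribute keys,
    including interactions of up to `max_order` attributes."""
    items = sorted(tags.items())
    keys = []
    for order in range(1, max_order + 1):
        for combo in combinations(items, order):
            keys.append(" & ".join(f"{name}={value}" for name, value in combo))
    return keys


# Hypothetical tags for one message.
message_tags = {"channel": "push", "day_of_week": "Sat", "value_prop": "save_time"}
keys = attribute_keys(message_tags)
print(keys)  # 3 single-attribute keys followed by 3 pairwise interaction keys
```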

Have five different value propositions and want to know which to use with which user? Randomly assign those value props to different users by sending them messages. Use your weighting strategy and ITS to aggregate the signal before and after each message; that before-and-after signal summarizes the evidence for and against the idea that the message had an impact. Then aggregate the before-and-after signal for each value proposition over time, using the long- and short-term memory parameterization of the attention function. Finally, convert that aggregated signal into a confidence estimate, the same way you would for an individual message.
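Aggregating per-attribute evidence for one user over time, and turning it into a confidence, might look like this sketch. It assumes each sent message has already been reduced to ITS `before`/`after` values plus a list of attribute keys. The 90-day long-term memory and 14-day half-life are hypothetical defaults, and the `confidence` function (a smoothed share of the evidence that falls after the message, where 0.5 means no evidence either way) is a placeholder: the post does not specify how a single message's signal is converted to confidence.

```python
from collections import defaultdict


def aggregate_preferences(history, now, long_term_days=90.0, half_life_days=14.0):
    """Decay-weighted before/after evidence per attribute key for one user.

    history: iterable of (sent_day, attribute_keys, before, after), where
    before/after are the ITS signals computed for that message.
    """
    totals = defaultdict(lambda: [0.0, 0.0])  # key -> [before, after]
    for sent_day, keys, before, after in history:
        age = now - sent_day
        if age < 0 or age > long_term_days:  # long-term memory: drop old messages
            continue
        decay = 0.5 ** (age / half_life_days)  # short-term memory: half-life
        for key in keys:
            totals[key][0] += decay * before
            totals[key][1] += decay * after
    return dict(totals)


def confidence(before, after, prior=1.0):
    """Placeholder confidence: smoothed share of evidence after the message."""
    return (after + prior) / (before + after + 2 * prior)


# Hypothetical history for one user who was randomly assigned value props.
history = [
    (0.0, ["value_prop=save_time"], 0.1, 0.4),
    (7.0, ["value_prop=save_money"], 0.3, 0.1),
    (14.0, ["value_prop=save_time"], 0.0, 0.2),
]
prefs = aggregate_preferences(history, now=14.0)
scores = {key: confidence(b, a) for key, (b, a) in prefs.items()}
print(scores)
```

Because the smoothing prior is fixed, small evidence totals stay close to 0.5; in practice the prior would probably need to be scaled to the units of your event weights.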

So learning preferences is a matter of loading up interventions with tags that represent attributes that could be used to select future interventions, and then aggregating signal over time.
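Once tags carry confidences, selecting a future intervention can be as simple as scoring candidates by their tags. This greedy mean-of-tags rule and the 0.5 fallback for tags with no history are assumptions for illustration. A purely greedy policy stops exploring, so in practice you would probably keep some random assignment, as in the value-proposition example, so that preferences keep being learned.

```python
def pick_intervention(candidates, scores, default=0.5):
    """Greedily choose the candidate whose attribute keys have the highest
    mean confidence; keys with no history fall back to `default`."""
    def mean_score(keys):
        if not keys:
            return default
        return sum(scores.get(key, default) for key in keys) / len(keys)

    return max(candidates, key=lambda cid: mean_score(candidates[cid]))


# Hypothetical candidates (id -> attribute keys) and learned confidences.
candidates = {
    "msg_a": ["value_prop=save_time", "channel=push"],
    "msg_b": ["value_prop=save_money", "channel=push"],
}
scores = {"value_prop=save_time": 0.57, "value_prop=save_money": 0.47}
print(pick_intervention(candidates, scores))  # msg_a
```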

Of course, this doesn't help for users who yield little or no signal with which to characterize their interventions. For them, we need a way to infer preferences from other users. But that's a topic for another post.